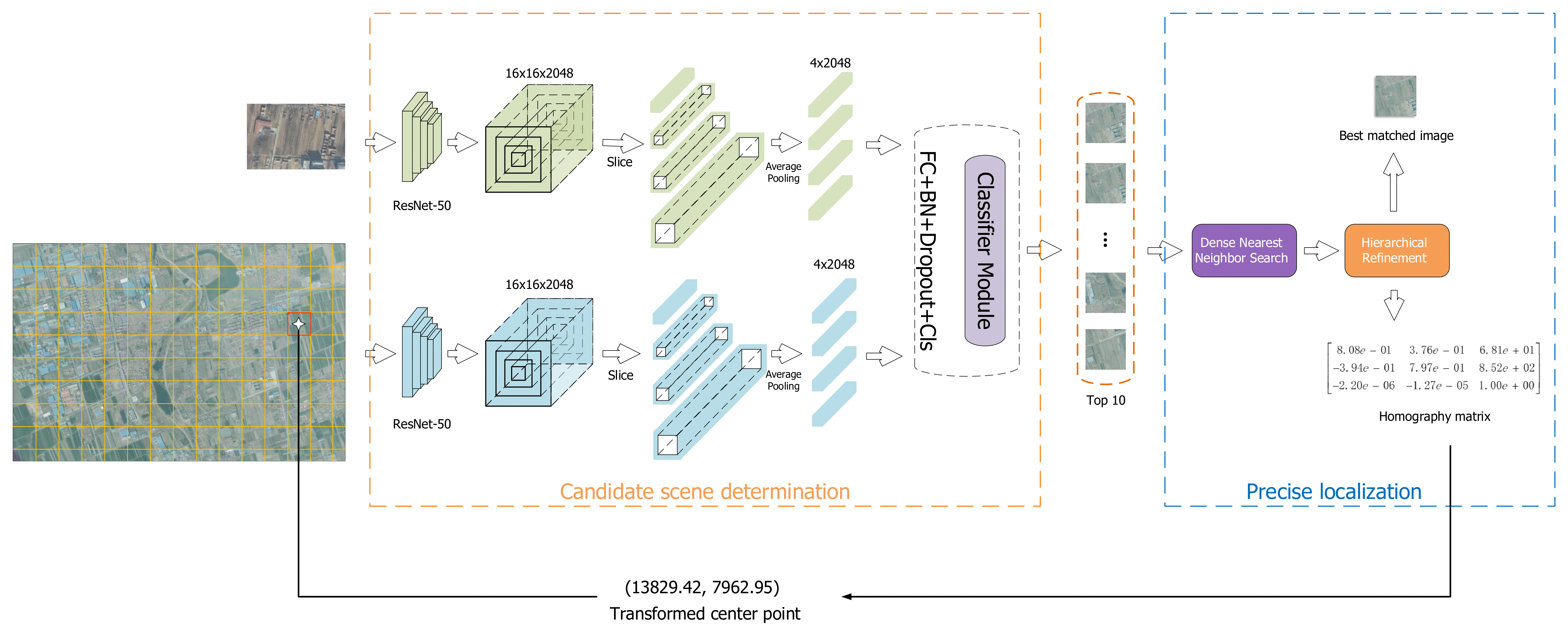

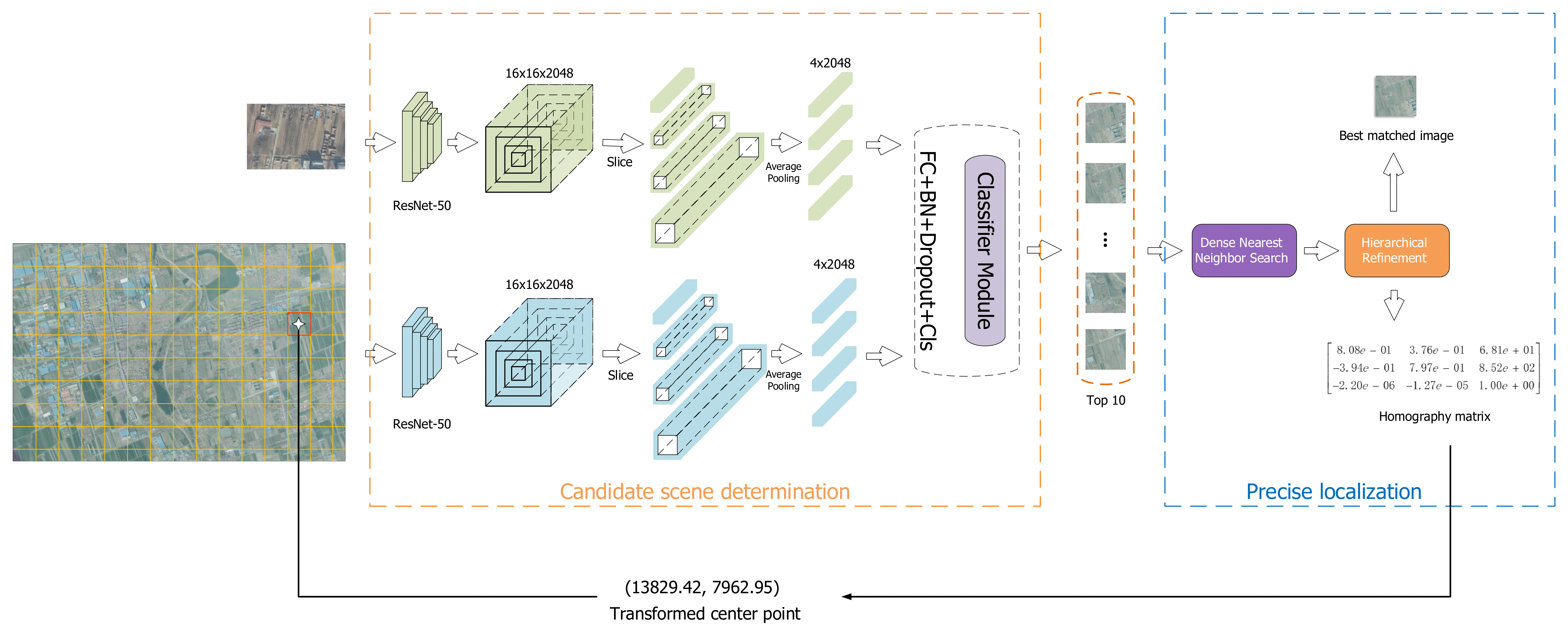

Unmanned aerial vehicle (UAV) geo-localization refers to finding the position of a given aerial image within a large reference satellite image. Because aerial and satellite images differ greatly in scale and illumination, most existing cross-view image matching algorithms fail to localize UAV images robustly and accurately. To address this problem, a novel UAV localization framework based on three-stage coarse-to-fine image matching is proposed. In the first stage, the satellite image is cropped into several local reference images to be matched with the aerial image. In the second stage, ten candidate local images are selected from all of the local reference images with a simple and effective deep learning network, LPN. In the final stage, deep feature-based matching is performed between the candidate local reference images and the aerial image to determine the optimal position of the UAV in the reference map via a homography transformation. In addition, a satellite-UAV image dataset is introduced, containing 3 large-scale satellite images and 1909 aerial images. Experiments on this dataset show that, for more than 80% of the test image pairs, the proposed method refines the positioning error to within 5 pixels, which meets the needs of UAV localization and outperforms other popular methods.
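The three stages described in the abstract can be illustrated with a minimal, dependency-free Python sketch. It is not the authors' implementation: the tile size, stride, and function names are hypothetical, plain descriptor vectors with cosine similarity stand in for LPN embeddings, and the homography `H` is assumed to have already been estimated by the feature-matching stage.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in satellite-map pixels


def tile_boxes(width: int, height: int, tile: int, stride: int) -> List[Box]:
    """Stage 1: slide a tile x tile window over the satellite image."""
    boxes = []
    for y0 in range(0, height - tile + 1, stride):
        for x0 in range(0, width - tile + 1, stride):
            boxes.append((x0, y0, x0 + tile, y0 + tile))
    return boxes


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query: Sequence[float],
          tile_descs: Sequence[Sequence[float]],
          k: int = 10) -> List[int]:
    """Stage 2: indices of the k tiles most similar to the aerial image.

    The descriptors are placeholders; in the paper they come from LPN.
    """
    scores = [cosine(query, d) for d in tile_descs]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def project(H: Sequence[Sequence[float]], x: float, y: float) -> Tuple[float, float]:
    """Apply a 3x3 homography H to the point (x, y)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def locate(H: Sequence[Sequence[float]],
           aerial_size: Tuple[int, int],
           box: Box) -> Tuple[float, float]:
    """Stage 3: map the aerial image centre into the matched tile,
    then add the tile offset to get satellite-map coordinates."""
    cx, cy = aerial_size[0] / 2, aerial_size[1] / 2
    u, v = project(H, cx, cy)
    return box[0] + u, box[1] + v
```

A typical use would call `tile_boxes` once per satellite image, rank the tiles with `top_k` (k = 10, as in the abstract), estimate a homography for each candidate, and pass the best one to `locate` to obtain the UAV position in the reference map.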

@INPROCEEDINGS{10137193,
  author={Luo, Xubo and Tian, Yaolin and Wan, Xue and Xu, Jingzhong and Ke, Tao},
  booktitle={2022 International Conference on Service Robotics (ICoSR)},
  title={Deep learning based cross-view image matching for UAV geo-localization},
  year={2022},
  volume={},
  number={},
  pages={102-106},
  keywords={Location awareness;Deep learning;Satellites;Service robots;Image matching;Refining;Lighting;deep learning;image matching;geo-localization;autonomous drone navigation},
  doi={10.1109/ICoSR57188.2022.00028}}